
SG++-Doxygen-Documentation

|

|

SG++-Doxygen-Documentation

|

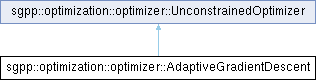

sgpp::optimization::optimizer::AdaptiveGradientDescent Class Reference

Gradient descent with adaptive step size.

#include <AdaptiveGradientDescent.hpp>

Static Public Attributes

| Type | Name | Description |
|---|---|---|
| `static constexpr double` | DEFAULT_LINE_SEARCH_ACCURACY = 0.01 | default line search accuracy |
| `static constexpr double` | DEFAULT_STEP_SIZE_DECREASE_FACTOR = 0.5 | default step size decrease factor |
| `static constexpr double` | DEFAULT_STEP_SIZE_INCREASE_FACTOR = 1.2 | default step size increase factor |
| `static constexpr double` | DEFAULT_TOLERANCE = 1e-6 | default tolerance |

Static Public Attributes inherited from sgpp::optimization::optimizer::UnconstrainedOptimizer

| Type | Name | Description |
|---|---|---|
| `static const size_t` | DEFAULT_N = 1000 | default maximal number of iterations or function evaluations |

Protected Attributes

| Type | Name | Description |
|---|---|---|
| `double` | rhoAlphaMinus | step size decrease factor |
| `double` | rhoAlphaPlus | step size increase factor |
| `double` | rhoLs | line search accuracy |
| `double` | theta | tolerance |

Protected Attributes inherited from sgpp::optimization::optimizer::UnconstrainedOptimizer

| Type | Name | Description |
|---|---|---|
| `std::unique_ptr<base::ScalarFunction>` | f | objective function |
| `std::unique_ptr<base::ScalarFunctionGradient>` | fGradient | objective function gradient |
| `std::unique_ptr<base::ScalarFunctionHessian>` | fHessian | objective function Hessian |
| `base::DataVector` | fHist | search history vector (optimal values) |
| `double` | fOpt | result of optimization (optimal function value) |
| `size_t` | N | maximal number of iterations or function evaluations |
| `base::DataVector` | x0 | starting point |
| `base::DataMatrix` | xHist | search history matrix (optimal points) |
| `base::DataVector` | xOpt | result of optimization (location of optimum) |

Detailed Description

Gradient descent with adaptive step size.
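The attribute names listed above suggest the following scheme: from the current point, take a step of length α along the normalized negative gradient; if an Armijo-type sufficient-decrease test scaled by `rhoLs` fails, multiply α by `rhoAlphaMinus` and retry; once a step is accepted, multiply α by `rhoAlphaPlus` for the next iteration. The standalone C++ sketch below illustrates one such step under exactly these assumptions. It does not use SG++; `adaptiveStep`, `Objective`, `Gradient` and the backtracking cap are hypothetical, and the acceptance test in SG++'s own `optimize()` may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-ins for base::ScalarFunction and base::ScalarFunctionGradient.
using Vec = std::vector<double>;
using Objective = std::function<double(const Vec&)>;
// Returns f(x) and writes the gradient of f at x into `grad`.
using Gradient = std::function<double(const Vec&, Vec&)>;

// Performs one adaptive step starting at x (updated in place) and returns the
// function value at the resulting point. `alpha` is shrunk by rhoAlphaMinus
// after every rejected trial point and enlarged by rhoAlphaPlus once a trial
// point is accepted.
double adaptiveStep(const Objective& f, const Gradient& fGradient, Vec& x,
                    double& alpha, double rhoAlphaPlus = 1.2,
                    double rhoAlphaMinus = 0.5, double rhoLs = 0.01) {
  // Assumed valid ranges, inferred from the documented defaults.
  assert(rhoAlphaPlus > 1.0);
  assert(rhoAlphaMinus > 0.0 && rhoAlphaMinus < 1.0);
  assert(rhoLs > 0.0 && rhoLs < 1.0);

  Vec grad(x.size());
  const double fx = fGradient(x, grad);
  double gradNorm = 0.0;
  for (double gi : grad) gradNorm += gi * gi;
  gradNorm = std::sqrt(gradNorm);
  if (gradNorm == 0.0) return fx;  // stationary point: no descent direction

  Vec xNew(x.size());
  const int maxTrials = 60;  // hypothetical cap on backtracking steps
  for (int trial = 0; trial < maxTrials; ++trial) {
    // Trial point along the normalized direction s = -grad / ||grad||.
    for (std::size_t t = 0; t < x.size(); ++t) {
      xNew[t] = x[t] - alpha * grad[t] / gradNorm;
    }
    const double fxNew = f(xNew);
    // Armijo condition f(x + alpha s) <= f(x) + rhoLs * alpha * grad^T s,
    // where grad^T s = -||grad|| for the normalized direction.
    if (fxNew <= fx - rhoLs * alpha * gradNorm) {
      x = xNew;
      alpha *= rhoAlphaPlus;  // accepted: try a longer step next time
      return fxNew;
    }
    alpha *= rhoAlphaMinus;  // rejected: shorten the step and retry
  }
  return fx;  // no acceptable trial point; x is left unchanged
}
```

For f(x) = x₁² + x₂² starting at (3, 4) with α = 20, the trial steps of length 20 and 10 are rejected and the step of length 5 lands exactly on the minimum, after which α becomes 5 · 1.2 = 6.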

Constructor & Destructor Documentation

`sgpp::optimization::optimizer::AdaptiveGradientDescent::AdaptiveGradientDescent(const base::ScalarFunction& f, const base::ScalarFunctionGradient& fGradient, size_t maxItCount = DEFAULT_N, double tolerance = DEFAULT_TOLERANCE, double stepSizeIncreaseFactor = DEFAULT_STEP_SIZE_INCREASE_FACTOR, double stepSizeDecreaseFactor = DEFAULT_STEP_SIZE_DECREASE_FACTOR, double lineSearchAccuracy = DEFAULT_LINE_SEARCH_ACCURACY)`

Constructor.

Parameters:

| Parameter | Description |
|---|---|
| f | objective function |
| fGradient | objective function gradient |
| maxItCount | maximal number of function evaluations |
| tolerance | tolerance |
| stepSizeIncreaseFactor | step size increase factor |
| stepSizeDecreaseFactor | step size decrease factor |
| lineSearchAccuracy | line search accuracy |
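The getter and setter entries further down reference the protected attribute that stores each constructor argument: `tolerance` in `theta`, `stepSizeIncreaseFactor` in `rhoAlphaPlus`, `stepSizeDecreaseFactor` in `rhoAlphaMinus`, and `lineSearchAccuracy` in `rhoLs`; `maxItCount` presumably ends up in the inherited `N`. The hypothetical class `AdaptiveGdParams` below mirrors that mapping and the documented defaults in plain C++. It is not the SG++ class: it leaves out the objective function members, and the range checks in `checkRanges()` are assumptions inferred from the default values.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical mirror of the AdaptiveGradientDescent tuning parameters; this is
// not SG++ code and omits the objective function members (f, fGradient, ...).
class AdaptiveGdParams {
 public:
  static constexpr std::size_t DEFAULT_N = 1000;
  static constexpr double DEFAULT_TOLERANCE = 1e-6;
  static constexpr double DEFAULT_STEP_SIZE_INCREASE_FACTOR = 1.2;
  static constexpr double DEFAULT_STEP_SIZE_DECREASE_FACTOR = 0.5;
  static constexpr double DEFAULT_LINE_SEARCH_ACCURACY = 0.01;

  explicit AdaptiveGdParams(
      std::size_t maxItCount = DEFAULT_N, double tolerance = DEFAULT_TOLERANCE,
      double stepSizeIncreaseFactor = DEFAULT_STEP_SIZE_INCREASE_FACTOR,
      double stepSizeDecreaseFactor = DEFAULT_STEP_SIZE_DECREASE_FACTOR,
      double lineSearchAccuracy = DEFAULT_LINE_SEARCH_ACCURACY)
      : N(maxItCount),
        theta(tolerance),
        rhoAlphaPlus(stepSizeIncreaseFactor),
        rhoAlphaMinus(stepSizeDecreaseFactor),
        rhoLs(lineSearchAccuracy) {
    checkRanges();
  }

  // Each getter/setter pair reads or writes exactly one protected attribute.
  std::size_t getN() const { return N; }
  double getTolerance() const { return theta; }
  void setTolerance(double tolerance) {
    theta = tolerance;
    checkRanges();
  }
  double getStepSizeIncreaseFactor() const { return rhoAlphaPlus; }
  void setStepSizeIncreaseFactor(double stepSizeIncreaseFactor) {
    rhoAlphaPlus = stepSizeIncreaseFactor;
    checkRanges();
  }
  double getStepSizeDecreaseFactor() const { return rhoAlphaMinus; }
  void setStepSizeDecreaseFactor(double stepSizeDecreaseFactor) {
    rhoAlphaMinus = stepSizeDecreaseFactor;
    checkRanges();
  }
  double getLineSearchAccuracy() const { return rhoLs; }
  void setLineSearchAccuracy(double lineSearchAccuracy) {
    rhoLs = lineSearchAccuracy;
    checkRanges();
  }

 protected:
  std::size_t N;         // maximal number of iterations or function evaluations
  double theta;          // tolerance
  double rhoAlphaPlus;   // step size increase factor
  double rhoAlphaMinus;  // step size decrease factor
  double rhoLs;          // line search accuracy

 private:
  // Assumed valid ranges (inferred from the defaults, not documented here).
  void checkRanges() const {
    assert(theta >= 0.0);
    assert(rhoAlphaPlus > 1.0);
    assert(rhoAlphaMinus > 0.0 && rhoAlphaMinus < 1.0);
    assert(rhoLs > 0.0 && rhoLs < 1.0);
  }
};
```

Declaring the defaults as `static constexpr` members, as the documented class does, lets them serve directly as default arguments of the constructor.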

`sgpp::optimization::optimizer::AdaptiveGradientDescent::AdaptiveGradientDescent(const AdaptiveGradientDescent& other)`

Copy constructor.

Parameters:

| Parameter | Description |
|---|---|
| other | optimizer to be copied |

`sgpp::optimization::optimizer::AdaptiveGradientDescent::~AdaptiveGradientDescent()` (override)

Destructor.

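Member Function Documentation

clone (override, virtual)

Parameters:

| Direction | Parameter | Description |
|---|---|---|
| [out] | clone | pointer to cloned object |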

Implements sgpp::optimization::optimizer::UnconstrainedOptimizer.

References clone().

Referenced by clone().
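The documentation only states that `clone` hands back a pointer to the cloned object through an `[out]` parameter. One common C++ way to implement such an out-parameter clone is to assign a `std::unique_ptr` created from the copy constructor, as sketched below. The class names `OptimizerBase` and `AdaptiveGd`, the `std::unique_ptr<OptimizerBase>&` parameter type, and the non-negativity check are assumptions for illustration, not the verified SG++ signature.

```cpp
#include <cassert>
#include <memory>

// Hypothetical polymorphic base standing in for UnconstrainedOptimizer.
class OptimizerBase {
 public:
  virtual ~OptimizerBase() = default;
  // Out-parameter clone: on return, `clone` owns a new copy of *this.
  virtual void clone(std::unique_ptr<OptimizerBase>& clone) const = 0;
};

// Hypothetical derived optimizer with a single tuning parameter.
class AdaptiveGd : public OptimizerBase {
 public:
  explicit AdaptiveGd(double tolerance) : theta(tolerance) {
    assert(tolerance >= 0.0);  // assumed: tolerances are non-negative
  }
  AdaptiveGd(const AdaptiveGd& other) = default;  // copy constructor
  ~AdaptiveGd() override = default;               // destructor

  void clone(std::unique_ptr<OptimizerBase>& clone) const override {
    // Inside this body the parameter name shadows the member function,
    // so `clone` refers to the out-parameter. Reuse the copy constructor.
    clone = std::make_unique<AdaptiveGd>(*this);
  }

  double getTolerance() const { return theta; }

 private:
  double theta;
};
```

Returning the copy through a `std::unique_ptr` makes the caller the sole owner of the clone, and delegating to the copy constructor keeps `clone` correct when members are added later.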

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::getLineSearchAccuracy() const`

Returns the line search accuracy.

References rhoLs.

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::getStepSizeDecreaseFactor() const`

Returns the step size decrease factor.

References rhoAlphaMinus.

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::getStepSizeIncreaseFactor() const`

Returns the step size increase factor.

References rhoAlphaPlus.

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::getTolerance() const`

Returns the tolerance.

References theta.

optimize() (override, virtual)

Optimizes the objective function. This implements the pure virtual method declared in sgpp::optimization::optimizer::UnconstrainedOptimizer.

The result of the optimization process can be obtained by member functions, e.g., getOptimalPoint() and getOptimalValue().

Implements sgpp::optimization::optimizer::UnconstrainedOptimizer.

References alpha, sgpp::base::DataVector::append(), sgpp::base::DataMatrix::appendRow(), sgpp::optimization::optimizer::UnconstrainedOptimizer::f, sgpp::optimization::optimizer::UnconstrainedOptimizer::fGradient, sgpp::optimization::optimizer::UnconstrainedOptimizer::fHist, sgpp::optimization::optimizer::UnconstrainedOptimizer::fOpt, sgpp::base::Printer::getInstance(), sgpp::base::DataVector::l2Norm(), sgpp::optimization::optimizer::UnconstrainedOptimizer::N, sgpp::base::Printer::printStatusBegin(), sgpp::base::Printer::printStatusEnd(), sgpp::base::Printer::printStatusUpdate(), sgpp::base::DataMatrix::resize(), rhoAlphaMinus, rhoAlphaPlus, rhoLs, theta, sgpp::base::DataVector::toString(), sgpp::optimization::optimizer::UnconstrainedOptimizer::x0, sgpp::optimization::optimizer::UnconstrainedOptimizer::xHist, and sgpp::optimization::optimizer::UnconstrainedOptimizer::xOpt.
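A minimal standalone sketch of a complete optimization loop in the spirit of `optimize()`. It assumes that `N` bounds the total number of function and gradient evaluations, that the loop stops once the gradient norm drops below `theta`, that each step follows an Armijo-type backtracking line search along the normalized negative gradient, and that every accepted point is appended to the search history, as the references to `appendRow()` and `append()` hint. `Result`, `adaptiveGradientDescent` and the initial step size of 1 are hypothetical; the actual stopping rules of SG++ may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical container for the quantities the documented optimizer stores
// in xOpt, fOpt, xHist and fHist.
struct Result {
  Vec xOpt;
  double fOpt;
  std::vector<Vec> xHist;
  std::vector<double> fHist;
};

// f(x) returns the objective value; fGradient(x, grad) returns f(x) and writes
// the gradient into grad. N bounds the total number of evaluations of either.
Result adaptiveGradientDescent(
    const std::function<double(const Vec&)>& f,
    const std::function<double(const Vec&, Vec&)>& fGradient, const Vec& x0,
    std::size_t N = 1000, double theta = 1e-6, double rhoAlphaPlus = 1.2,
    double rhoAlphaMinus = 0.5, double rhoLs = 0.01) {
  assert(!x0.empty() && N > 0);
  const std::size_t d = x0.size();
  Vec x = x0, grad(d), xNew(d);
  double alpha = 1.0;  // hypothetical initial step size
  std::size_t k = 0;   // evaluations used so far

  double fx = fGradient(x, grad);
  ++k;
  Result res{x, fx, {x}, {fx}};

  while (k < N) {
    double gradNorm = 0.0;
    for (double gi : grad) gradNorm += gi * gi;
    gradNorm = std::sqrt(gradNorm);
    if (gradNorm < theta) break;  // assumed stopping test on the gradient norm

    // Backtrack along s = -grad / ||grad|| until the Armijo condition holds.
    bool accepted = false;
    double fxNew = fx;
    while (k < N && !accepted) {
      for (std::size_t t = 0; t < d; ++t) xNew[t] = x[t] - alpha * grad[t] / gradNorm;
      fxNew = f(xNew);
      ++k;
      accepted = fxNew <= fx - rhoLs * alpha * gradNorm;
      alpha *= accepted ? rhoAlphaPlus : rhoAlphaMinus;
    }
    if (!accepted) break;  // evaluation budget exhausted inside the line search

    x = xNew;
    fx = fxNew;
    res.xHist.push_back(x);  // history of accepted points and their values
    res.fHist.push_back(fx);
    if (k >= N) break;
    fx = fGradient(x, grad);  // gradient at the new point (same value as fxNew)
    ++k;
  }
  res.xOpt = x;
  res.fOpt = fx;
  return res;
}
```

After the call, `xOpt` and `fOpt` play the role that `getOptimalPoint()` and `getOptimalValue()` play for the documented class.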

`void sgpp::optimization::optimizer::AdaptiveGradientDescent::setLineSearchAccuracy(double lineSearchAccuracy)`

Sets the line search accuracy.

Parameters:

| Parameter | Description |
|---|---|
| lineSearchAccuracy | line search accuracy |

References rhoLs.

`void sgpp::optimization::optimizer::AdaptiveGradientDescent::setStepSizeDecreaseFactor(double stepSizeDecreaseFactor)`

Sets the step size decrease factor.

Parameters:

| Parameter | Description |
|---|---|
| stepSizeDecreaseFactor | step size decrease factor |

References rhoAlphaMinus.

`void sgpp::optimization::optimizer::AdaptiveGradientDescent::setStepSizeIncreaseFactor(double stepSizeIncreaseFactor)`

Sets the step size increase factor.

Parameters:

| Parameter | Description |
|---|---|
| stepSizeIncreaseFactor | step size increase factor |

References rhoAlphaPlus.

`void sgpp::optimization::optimizer::AdaptiveGradientDescent::setTolerance(double tolerance)`

Sets the tolerance.

Parameters:

| Parameter | Description |
|---|---|
| tolerance | tolerance |

References theta.

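Member Data Documentation

`constexpr double sgpp::optimization::optimizer::AdaptiveGradientDescent::DEFAULT_LINE_SEARCH_ACCURACY = 0.01` (static, constexpr)

default line search accuracy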

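`constexpr double sgpp::optimization::optimizer::AdaptiveGradientDescent::DEFAULT_STEP_SIZE_DECREASE_FACTOR = 0.5` (static, constexpr)

default step size decrease factor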

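`constexpr double sgpp::optimization::optimizer::AdaptiveGradientDescent::DEFAULT_STEP_SIZE_INCREASE_FACTOR = 1.2` (static, constexpr)

default step size increase factor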

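`constexpr double sgpp::optimization::optimizer::AdaptiveGradientDescent::DEFAULT_TOLERANCE = 1e-6` (static, constexpr)

default tolerance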
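Assuming the step size α is multiplied by `DEFAULT_STEP_SIZE_DECREASE_FACTOR` after every rejected trial step and by `DEFAULT_STEP_SIZE_INCREASE_FACTOR` once a step is accepted (an interpretation suggested by the attribute names, not a rule stated in this documentation), an iteration that needs m_k rejections changes the step size as follows; with the defaults and m_k = 2, the step size drops to 0.3 times its previous value:

$$
\alpha_{k+1} = \rho_{\alpha}^{+}\,\left(\rho_{\alpha}^{-}\right)^{m_k}\,\alpha_k,
\qquad
\rho_{\alpha}^{+} = 1.2,\quad \rho_{\alpha}^{-} = 0.5,\quad m_k = 2
\;\Longrightarrow\;
\alpha_{k+1} = 1.2 \cdot 0.5^{2} \cdot \alpha_k = 0.3\,\alpha_k .
$$

Under this assumption only an iteration without any rejection (m_k = 0) enlarges the step size, since 1.2 · 0.5 = 0.6 < 1 already for a single rejection.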

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::rhoAlphaMinus` (protected)

step size decrease factor

Referenced by getStepSizeDecreaseFactor(), optimize(), and setStepSizeDecreaseFactor().

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::rhoAlphaPlus` (protected)

step size increase factor

Referenced by getStepSizeIncreaseFactor(), optimize(), and setStepSizeIncreaseFactor().

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::rhoLs` (protected)

line search accuracy

Referenced by getLineSearchAccuracy(), optimize(), and setLineSearchAccuracy().

`double sgpp::optimization::optimizer::AdaptiveGradientDescent::theta` (protected)

tolerance

Referenced by getTolerance(), optimize(), and setTolerance().